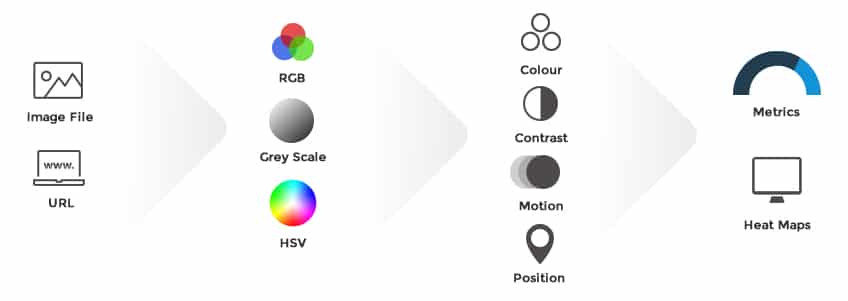

Our model was designed from the ground up to take into account the physiology and psychology of vision as well as design practice. Drawing on extensive published data and in-house research, we refined attention theory for web design and identified the key features and visual stimuli.

We then built a computer vision package on the OpenCV library to extract and grade these features.